As a key layer of technology in any organization’s security infrastructure, visual AI weapon detection has become a game-changer in the fight against gun violence. The technology integrates with an existing CCTV system or any camera with an IP connection, turning it into a 24/7 monitor for many types of guns, including pistols, rifles, semi-automatic weapons, and other firearms. A camera equipped with this technology can identify a gun the moment it is brandished, enabling a swift, robust response that mitigates the threat and saves lives. This is why it is being installed in hundreds of organizations across the country, including schools, restaurants, hospitals, enterprises, places of worship, retail stores, sporting events, train stations, and concert venues.

High-Level Advantages

- Visual AI weapon detection is a key layer of technology in an organization’s security infrastructure.

- This technology has become a game-changer against gun violence because it can identify a weapon the instant it is brandished.

- The software works with cameras already in place that have an IP connection.

- Organizations across the country are deploying this technology at a rapid rate to fight growing gun violence and mass shootings.

From AI Winter to Visual Revolution: The Story Behind AI Weapon Detection Technology

To understand how AI can now detect weapons in real time, we need to travel back to a time when artificial intelligence was considered a dead end, a period researchers call the “AI Winter.”

The Dark Ages of AI (1970s-1990s)

By the 1980s, the initial excitement around AI had frozen into disappointment. Early neural networks, first conceived in the 1940s by McCulloch and Pitts, had hit seemingly insurmountable computational and theoretical walls: computers were too slow, datasets too small, and the mathematics too limited. The influential 1969 book “Perceptrons” by Marvin Minsky and Seymour Papert proved mathematically that simple single-layer networks had hard limits, and it contributed to a sharp decline in funding for neural network research. There were two major “AI winters,” periods of reduced funding and waning interest: the first from the mid-1970s to the early 1980s, and the second from the late 1980s through the mid-1990s. Funding dried up and many researchers abandoned the field. The dream of teaching machines to see and understand the world seemed like science fiction.

But a few persistent scientists kept the flame alive. Geoffrey Hinton at the University of Toronto, Yann LeCun at Bell Labs (and later NYU), and Yoshua Bengio at the University of Montreal continued working on neural networks when almost everyone else had given up. These three would later become known as the “godfathers of deep learning,” sharing the 2018 Turing Award for their foundational contributions.

The First Sparks: Machines That Drive

The renaissance began in an unlikely place: the Mojave Desert. In 2004 and 2005, DARPA held the Grand Challenge, a race in which autonomous vehicles had to navigate well over 100 miles of treacherous desert terrain without human intervention. The first year was a spectacular failure; no vehicle completed the course.

But in 2005, the second Grand Challenge produced a breakthrough. Stanford’s “Stanley,” led by Sebastian Thrun, completed the 132-mile course in 6 hours and 53 minutes, winning the $2 million prize. This wasn’t just about self-driving cars. It demonstrated that machines could process visual information and make split-second decisions in complex, real-world environments. The excitement around “machine learning” was beginning to grow.

The Competition That Changed Everything: ImageNet 2012

The breakthrough moment came in October 2012, at an academic competition that most people had never heard of: the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It posed a deceptively simple question: could computers identify objects in photographs as well as humans can?

For years, progress had been glacial. The best traditional computer vision methods achieved a top-5 error rate of 26.2% on the ImageNet dataset, meaning that for about one in four images the correct label was not even among the system’s five best guesses. That was far from human-level performance.

Then Alex Krizhevsky, a graduate student at the University of Toronto working with Geoffrey Hinton and Ilya Sutskever, submitted something extraordinary. Their deep convolutional neural network, dubbed “AlexNet,” didn’t just beat the competition; it demolished it. AlexNet achieved a top-5 error rate of just 15.3%, a relative reduction of over 40% compared with the best traditional method. It was a quantum leap that stunned the computer vision community.
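The arithmetic behind those figures is easy to check. The sketch below is illustrative only: `top5_error` and `relative_reduction` are hypothetical helper names and the toy predictions are made up. Only the 26.2% and 15.3% error rates come from the text above.

```python
def top5_error(predictions, labels):
    """Fraction of images whose true label is NOT among the top five guesses."""
    misses = sum(1 for top5, label in zip(predictions, labels) if label not in top5[:5])
    return misses / len(labels)


def relative_reduction(old_error, new_error):
    """Drop in error rate, expressed as a fraction of the old error."""
    return (old_error - new_error) / old_error


# Toy, made-up data: four images, each with five ranked guesses.
toy_predictions = [
    ["cat", "dog", "fox", "lynx", "wolf"],
    ["car", "bus", "van", "truck", "tram"],
    ["cup", "bowl", "vase", "jar", "pot"],
    ["owl", "hawk", "crow", "dove", "gull"],
]
toy_labels = ["fox", "bicycle", "cup", "gull"]
toy_error = top5_error(toy_predictions, toy_labels)  # "bicycle" is the only miss

# Error rates quoted in the text: best traditional method vs. AlexNet.
reduction = relative_reduction(0.262, 0.153)

print(f"toy top-5 error: {toy_error:.2f}")
print(f"relative reduction: {reduction:.1%}")
```

An absolute drop of 10.9 percentage points on a 26.2% baseline is what yields the "over 40%" relative figure.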

The Secret Sauce: Deep Learning Unleashed

What made AlexNet special wasn’t just its accuracy—it was how it achieved that accuracy. Instead of programmers hand-coding rules about what objects should look like, AlexNet “learned” to see on its own. It was a Convolutional Neural Network (CNN) with eight layers (five convolutional and three fully connected) that could automatically discover visual patterns.

How Computers Learn to See

But what does it actually mean for a computer to “see” something? In computer vision, images are processed as arrays of numbers representing pixel intensities. When you show a computer a photo of a cat, it doesn’t see whiskers and fur; it sees millions of numerical values ranging from 0 to 255, each representing the brightness of the red, green, or blue channel at one tiny point in the image.
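To make this concrete, here is a minimal sketch of an image as nothing more than numbers. The 2x2 image and the simple channel-averaging grayscale conversion are illustrative assumptions, not part of any particular detection product.

```python
# A tiny 2x2 RGB "image": each pixel is (red, green, blue), each value 0-255.
image = [
    [(255, 0, 0), (0, 255, 0)],      # red pixel, green pixel
    [(0, 0, 255), (255, 255, 255)],  # blue pixel, white pixel
]

height = len(image)
width = len(image[0])
total_values = height * width * 3  # three channel values per pixel


def to_grayscale(pixel):
    """Average the three channels into one brightness value (a simple approximation)."""
    r, g, b = pixel
    return (r + g + b) // 3


gray = [[to_grayscale(p) for p in row] for row in image]

# Number of values in a single 1920x1080 colour video frame.
full_hd_values = 1920 * 1080 * 3

print(total_values, gray, full_hd_values)
```

A single Full HD frame therefore holds over six million numbers, which is the raw material a neural network has to make sense of.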

AlexNet’s breakthrough was teaching computers to find meaningful patterns in these numerical arrays. Just as a child learns to recognize cats by seeing thousands of examples and gradually understanding what makes a cat different from a dog, AlexNet was “trained” by being shown 1.2 million labeled images and learning to associate certain numerical patterns with specific objects.

The learning process works through trial and error, repeated millions of times. Initially, AlexNet made essentially random guesses about what it was seeing. After each guess, the network’s output was compared with the correct label, and an algorithm called backpropagation adjusted the network’s mathematical connections (its weights): connections that pushed toward the correct answer were strengthened, and those that pushed toward the wrong answer were weakened. Through this process, repeated across millions of examples, the network gradually learned that certain combinations of edges, textures, and shapes reliably indicated the presence of specific objects.
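In practice, this feedback loop is usually implemented as gradient descent. The sketch below trains a single artificial neuron on toy data, which is far simpler than AlexNet’s actual training; the data, learning rate, and epoch count are all assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that the example belongs to class 1."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train(examples, labels, learning_rate=0.5, epochs=2000):
    """Repeatedly nudge the weights to shrink the gap between guesses and labels."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(examples, labels):
            error = predict(weights, bias, features) - label  # signed mistake
            # Gradient of the log-loss: move each weight against its share of the error.
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Toy, made-up data: two features per example; the label is 1 only when both are high.
examples = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = [0, 0, 0, 1]

weights, bias = train(examples, labels)
guesses = [round(predict(weights, bias, x)) for x in examples]
print(guesses)
```

A deep network applies the same idea to millions of weights at once, with backpropagation working out each weight’s share of the error.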

The eight layers of AlexNet work like a hierarchy of recognition. The first layers detect simple features like edges and corners—the visual equivalent of recognizing that lines and curves exist in the image. Middle layers combine these simple features into more complex patterns like textures and shapes—perhaps recognizing that certain edge combinations form whiskers or pointed ears. The final layers combine these complex features into full object recognition—understanding that the presence of whiskers, pointed ears, and specific textures together indicates “cat.”
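The first-layer behavior described above can be imitated with a hand-written filter. This is a minimal sketch, assuming a toy grayscale image and a fixed Sobel kernel; a real CNN learns its kernels from data instead of having them written by hand. As in most deep learning libraries, the "convolution" here slides the kernel without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy grayscale image: dark left half (0), bright right half (255).
image = [[0, 0, 0, 255, 255, 255] for _ in range(3)]

# Vertical-edge kernel (Sobel). It responds where brightness changes left to right.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = convolve2d(image, sobel_x)
print(edges)
```

The output is zero over the flat dark and bright regions and large only where the window straddles the boundary, which is exactly what "detecting an edge" means numerically.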

This hierarchical learning is what makes deep learning so powerful for visual tasks like gun detection. The same principles that taught AlexNet to distinguish cats from dogs can teach modern systems to distinguish firearms from cell phones, even in complex, real-world security footage.

The breakthrough was powered by three crucial ingredients that had finally come together:

Massive Data: ImageNet provided 1.2 million labeled training images across 1,000 object categories—a scale of data never before available for machine learning.

Raw Computing Power: Graphics processing units (GPUs), originally designed for video games, turned out to be perfect for the parallel computations needed to train neural networks. AlexNet was trained on two NVIDIA GTX 580 GPUs.

Algorithmic Breakthroughs: Innovations like ReLU (Rectified Linear Unit) activation functions, dropout regularization, and data augmentation solved problems that had plagued neural networks for decades.
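The three algorithmic ideas named above are each only a few lines of code. The sketch below is illustrative: it uses "inverted" dropout, the variant common in modern frameworks, which is an assumption and not necessarily the exact formulation used in AlexNet, and a horizontal flip stands in for data augmentation in general.

```python
import random


def relu(values):
    """ReLU: keep positive activations, zero out negative ones."""
    return [max(0.0, v) for v in values]


def dropout(values, drop_prob, rng):
    """Inverted dropout (training time): zero each value with probability drop_prob
    and rescale the survivors so the expected total is unchanged."""
    keep_prob = 1.0 - drop_prob
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


def horizontal_flip(image):
    """Data augmentation: a mirrored copy serves as an extra training example."""
    return [list(reversed(row)) for row in image]


activations = relu([-2.0, -0.5, 0.0, 1.5, 3.0])
rng = random.Random(0)  # fixed seed so the sketch is repeatable
dropped = dropout(activations, drop_prob=0.5, rng=rng)
flipped = horizontal_flip([[1, 2, 3], [4, 5, 6]])

print(activations, dropped, flipped)
```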

From Photos to Life-Saving Detection

AlexNet’s victory triggered what researchers now call the “deep learning revolution.” Within months, tech giants were scrambling to hire the few researchers who understood these methods. By 2015, systems like Microsoft’s ResNet had pushed the ImageNet top-5 error rate below the level estimated for human annotators. By 2017, real-time object detection systems could identify and locate multiple objects in video streams in milliseconds.

This is the technology that now powers visual gun detection. The same neural network principles that learned to distinguish between 1,000 object categories in ImageNet can now distinguish firearms from benign objects in security footage. The system that started as an academic exercise in photo recognition has become a guardian that never sleeps, never blinks, and can spot a weapon faster than any human security guard.

The journey from the AI winter to today’s gun detection systems is a testament to the power of persistence, the importance of fundamental research, and the unpredictable ways that breakthrough technologies emerge.

How Visual AI Gun Detection Works

Visual AI gun detection technology leverages advanced AI software to recognize firearms. The AI accurately identifies guns across real-world environments, from a firearm resting on a table to one held in-hand while an assailant is in motion, whether outdoors on a sunny day or indoors in a dark hallway.

One of the leading visual AI gun detection systems is Omnilert Gun Detect. This system employs a proprietary, multi-step process to determine whether or not a gun represents a threat. The process involves detecting a human, recognizing a gun, and then identifying across successive video frames that the gun is being held and moved.

- ASSESS: It first assesses frames of video to find points of interest. We use the presence of a person within the field of view as our first point of interest. We use an AI object detector to identify a body – a torso, arms, legs, etc. It’s not looking for specific people or trying to identify a person, just the shape of a body without reference to color, gender, or other identifying personal attributes.

- DETECT: A secondary AI object detector then operates on the first point of interest, searching for a handgun or long gun in close proximity to the body. A wide range of handguns, shotguns, rifles, and military-style weapons are distinguished by the AI while inert objects such as cell phones, hand tools, common office objects, and more are identified to diminish possible false positives.

- ANALYZE: Finally, through a proprietary process, multiple frames of video are analyzed in sequence to establish a coherent track on the threat, reducing spurious or false detections. Additionally, the relationship of the gun to the arm and hand of the body is analyzed to bring additional clarity to the situation and help determine, with a high level of confidence, if an actual gun detection is, in fact, a threat.

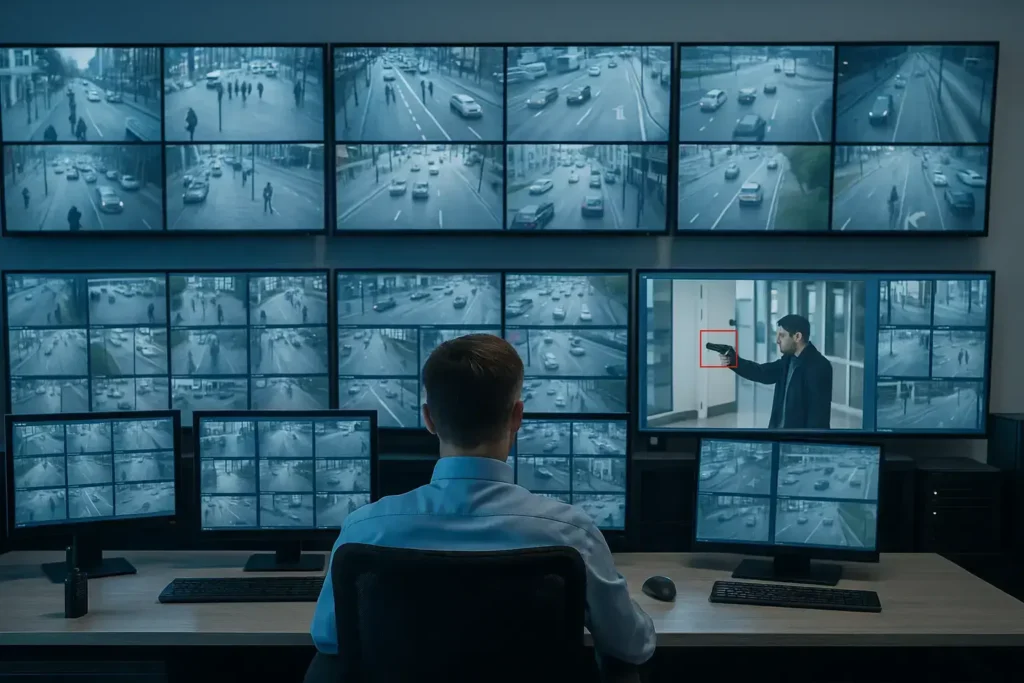
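The assess, detect, and analyze steps can be sketched in code. This is a simplified illustration under stated assumptions and not Omnilert’s proprietary implementation: the `Box` class, the detector callables, the proximity margin, and the three-frame persistence rule are all hypothetical, and the gun detector here scans the whole frame and filters by proximity afterward.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float

    def expanded(self, margin: float) -> "Box":
        return Box(self.x1 - margin, self.y1 - margin, self.x2 + margin, self.y2 + margin)

    def contains_center_of(self, other: "Box") -> bool:
        cx, cy = (other.x1 + other.x2) / 2, (other.y1 + other.y2) / 2
        return self.x1 <= cx <= self.x2 and self.y1 <= cy <= self.y2


# Hypothetical detector signature: takes a frame, returns bounding boxes.
Detector = Callable[[object], List[Box]]


def gun_near_person(frame, detect_people: Detector, detect_guns: Detector,
                    margin: float = 20.0) -> bool:
    """ASSESS, then DETECT: accept a gun only if it sits close to a detected body."""
    people = detect_people(frame)  # ASSESS: bodies are the points of interest
    if not people:
        return False
    guns = detect_guns(frame)      # DETECT: candidate firearms
    return any(p.expanded(margin).contains_center_of(g) for p in people for g in guns)


def is_threat(frames: Sequence[object], detect_people: Detector,
              detect_guns: Detector, min_consecutive: int = 3) -> bool:
    """ANALYZE: require the detection to persist across consecutive frames."""
    streak = 0
    for frame in frames:
        streak = streak + 1 if gun_near_person(frame, detect_people, detect_guns) else 0
        if streak >= min_consecutive:
            return True
    return False


# Stub detectors for demonstration: each "frame" is a dict of precomputed boxes.
def stub_people(frame):
    return frame["people"]


def stub_guns(frame):
    return frame["guns"]


person = Box(100, 50, 200, 400)
gun = Box(190, 200, 230, 220)
armed = {"people": [person], "guns": [gun]}
unarmed = {"people": [person], "guns": []}

sustained = is_threat([armed, armed, armed], stub_people, stub_guns)
flicker = is_threat([armed, unarmed, armed], stub_people, stub_guns)
print(sustained, flicker)
```

Requiring persistence across frames is one simple way to suppress single-frame false positives; a production system would track objects and model the hand-to-gun relationship far more carefully.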

The Importance of Alerts with Images and Video

When a camera with visual AI weapon detection identifies a firearm, it sends a notification of the threat to those assigned to receive these alerts. This may be an outside monitoring service, internal security personnel, or the local police department. Each organization decides where these notifications are best handled, and this is configured when the software is installed.

Once the recipients of notifications have been assigned, their next steps are critical because they are the first line of review for every detection. The most common method is to send an image, or single frame of video, of the suspect with an annotation, usually a box, around the suspected weapon. This image is sent to a phone, desktop computer, or other device for human verification. The problem with this approach is that a single image can provide only so much intelligence. Depending on lighting and camera quality, it can be difficult to determine accurately whether the threat is real.

In contrast, visual AI weapon detection technologies such as Omnilert’s AI Gun Detection solution send a video clip in addition to the still image, showing the potential threat with more context, including the seconds before and after the initial gun detection. While a picture may be worth a thousand words, a video provides vital context, showing exactly what is going on and giving the situational intelligence needed to make a confident lockdown-or-leave-alone decision.
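One common way to capture the seconds before and after a detection is a rolling frame buffer. The sketch below is a generic illustration, not a description of any vendor’s implementation; `ClipRecorder` and its parameters are hypothetical, and frame counts stand in for seconds (for example, 5 seconds at 15 frames per second would be 75 frames).

```python
from collections import deque


class ClipRecorder:
    """Keeps the last `pre_frames` frames and, on a detection, collects `post_frames` more."""

    def __init__(self, pre_frames, post_frames):
        self.pre_buffer = deque(maxlen=pre_frames)  # rolling window of recent frames
        self.post_frames = post_frames
        self.pending = None   # clip being assembled after a detection
        self.remaining = 0    # post-detection frames still needed

    def add_frame(self, frame, detected=False):
        """Feed one frame; returns a finished clip (list of frames) or None."""
        if self.pending is None:
            if not detected:
                self.pre_buffer.append(frame)
                return None
            # Detection: start a clip from the buffered history plus this frame.
            self.pending = list(self.pre_buffer) + [frame]
            self.remaining = self.post_frames
            self.pre_buffer.clear()
        else:
            self.pending.append(frame)
            self.remaining -= 1
        if self.remaining == 0:
            clip, self.pending = self.pending, None
            return clip
        return None


# Frames are plain integers here; a detection fires on frame 5.
recorder = ClipRecorder(pre_frames=3, post_frames=2)
clip = None
for frame_number in range(10):
    result = recorder.add_frame(frame_number, detected=(frame_number == 5))
    if result is not None:
        clip = result

print(clip)
```

The resulting clip contains the three frames before the detection, the triggering frame, and the two frames after it.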

Detecting the Gun Is Just the First Step

What happens in the moments after a weapon is identified is often the difference between lives lost and lives saved. An active shooter incident is a high-stress event in which people, even those trained to protect others, can become scared or make mistakes. That is why it’s important to automate the entire response. For example, unique to the Omnilert system, once an alert is sent to the designated person, they can click one simple button to carry out a full range of pre-planned responses. These could include:

Notifying the People at Risk

- Sending Text Messages & Desktop Alerts – Immediate alerts are sent via SMS, email and desktop notifications to inform students, employees, and anyone in the affected area.

- Making Audible Alarms & PA Announcements – Loudspeaker systems can be activated to deliver real-time instructions, directing people to safety.

- Posting Website Updates & Digital Signage – Emergency information can be automatically updated on school and workplace websites and digital bulletin boards.

Locking Down the Building for External Threats

- Automated Door Locking – Visual AI gun detection is often integrated with access control systems to lock doors automatically, preventing the shooter from moving freely.

- Elevator & Exit Controls – Security protocols can be triggered to restrict elevator access or guide individuals to designated safe exits.

Mobilizing First Responders

- Instant Notifications to Law Enforcement – Local police and emergency responders are notified immediately with real-time intelligence.

- Geolocation Data – Security teams receive the exact location details of the detected firearm, allowing for a faster and more precise response.

Establishing Unified Command & Collaboration

- Instant Web or Video Conference – Visual AI gun detection systems can be configured to automatically set up an emergency unified command center with most conference services, including Zoom, Microsoft Teams, or Google Meet, allowing law enforcement, security teams, and school or business administrators to collaborate in real-time.

- Information Sharing – Launching a meeting provides a live overview of the situation, including video footage, communication logs and emergency status updates.
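A one-button, pre-planned response like the one outlined above can be modeled as a list of actions that run together. The sketch below is a hypothetical design, not the Omnilert API: `ResponsePlan`, the action functions, and the alert fields are invented for illustration, and the simulated outage shows why one failing integration should not block the rest.

```python
from typing import Callable, Dict, List, Tuple


class ResponsePlan:
    """A named list of pre-planned actions that run together on one confirmation."""

    def __init__(self) -> None:
        self._actions: List[Tuple[str, Callable[[dict], None]]] = []

    def add(self, name: str, action: Callable[[dict], None]) -> None:
        self._actions.append((name, action))

    def execute(self, alert: dict) -> Dict[str, str]:
        """Run every action in order; record failures and keep going."""
        results = {}
        for name, action in self._actions:
            try:
                action(alert)
                results[name] = "ok"
            except Exception as exc:
                results[name] = f"failed: {exc}"
        return results


log = []


def send_sms(alert):
    log.append(f"SMS: weapon reported at {alert['location']}")


def lock_doors(alert):
    log.append(f"Locking doors near {alert['location']}")


def notify_police(alert):
    raise ConnectionError("dispatch gateway unreachable")  # simulated outage


plan = ResponsePlan()
plan.add("sms", send_sms)
plan.add("door_lock", lock_doors)
plan.add("police", notify_police)

results = plan.execute({"location": "Main entrance, camera 4"})
print(results)
```

Returning a per-action status lets the operator see immediately which steps succeeded and which need a manual fallback.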

How Training Affects the Accuracy of Visual AI Weapon Detection

For anyone looking at deploying visual AI weapon detection, it’s important to understand how the AI models being used were created. This determines the accuracy of any given system in detecting a weapon.

The most common ways to train an AI model are the organic, synthetic, and hybrid approaches. The difference among the three comes down to whether the model was trained on “real-life” or “pretend” scenarios.

- Organic Data Centric AI Data Training – This method uses raw video footage captured directly from security cameras in various real-world indoor and outdoor settings such as schools, hospitals and busy public environments from around the world. It reflects the genuine complexities and nuances of everyday environments – from changing lighting conditions to diverse human behaviors. Organic data captures the unpredictability of real-life scenarios that a gun detection system must navigate, such as understanding how different firearms might appear in various conditions. This type of training data enables the visual AI gun detection system to recognize threats more accurately, reducing the chance of false positives and missed gun detections.

- Synthetic Model Centric AI Data Training – This training approach leverages only computer-generated data created using advanced 3D animation and rendering techniques. This method is useful when real-life data is limited. It can “kick start” a project and augment a dataset with images that are similar to what would be encountered in real life. The problem with synthetic data is that it does not fully replicate the intricacies of real-world environments. Additionally, each frame of a computer-generated video is “perfect”, meaning it lacks the imperfections found in real camera streams, leading to less accurate systems that don’t scale.

- Hybrid Solutions – This method involves using real camera footage recorded against green screens to alter backgrounds and synthetically swap out environments digitally. Green-screening is a process whereby several cameras may be set up in a studio looking at a subject on a green backdrop and green floor. This method is insufficient for production systems because it inherently cannot match the real-world lighting, camera sensitivity and artifacts that the camera will typically produce.

Training with organic data will always win over synthetic or hybrid because it models real-life situations. This ultimately makes it more dependable in a critical situation like an active shooter event. We break this down in more detail in our Organic vs Synthetic AI Training Article.

Designed to Protect Privacy Rights

While visual AI weapon detection can be used in any public environment, one of the most popular use cases is gun detection in schools. With more than 330 gun violence incidents at U.S. schools in 2024, schools are actively enhancing their security to keep students and staff safe from these threats. However, schools in particular are also concerned with protecting personal privacy. That is why many visual gun detection systems have been designed to focus solely on detecting firearms without identifying individuals. No facial recognition is used on the people being monitored, and if a school chooses, the video feeds never leave the school premises.

See it for Yourself!

To see a demonstration of visual AI gun detection, click on this link. This video includes an overview and demonstration while also covering requirements and next steps.

Key Takeaways

- Visual AI gun detection is a game-changer against gun violence.

- The software works with existing IP-based cameras, enabling organizations to protect the investment they have already made in video surveillance.

- Advancements in deep learning drove the introduction of key AI technologies such as visual AI gun detection.

- Training models organically ensures a higher level of accuracy compared to training synthetically or via a hybrid (green screen) approach.

Frequently Asked Questions (FAQs)

What is visual AI weapon detection?

Leveraging advanced AI techniques, visual AI weapon detection can detect a weapon in a fraction of a second. Once a threat is verified, the system can then instantly activate a response that includes dispatching police, locking doors, sounding alarms and automating other responses to notify those in harm’s way and save lives.

At a high-level, how does visual AI weapon detection work?

The best visual AI gun detection technologies use a multi-step process to determine whether a weapon represents a threat. These steps include assessing, detecting and then analyzing.

How should models used in visual AI weapon detection be trained?

Models should always be trained using organic data, gathered through real-world customer deployments or raw video footage captured directly from security cameras in various real-life settings around the world. This ensures that the software can learn the genuine complexities and nuances of everyday environments, from changing lighting conditions to specific human behaviors.

Is visual AI weapon detection hard for an organization to deploy?

No, visual AI gun detection has been designed to work with any existing camera that has an IP connection.

What types of weapons can visual AI weapon detection software identify?

Visual AI weapon detection software can identify various weapons depending on what it was designed to detect. The most common use case today is for guns, specifically identifying firearms ranging from handguns to long guns and everything in between.

Is visual AI gun detection the primary technology used to fight gun violence and mass shooters?

No, AI gun detection is a key layer of technology in an organization’s overall security infrastructure, but it is not meant to be used alone. This infrastructure may include other technologies such as metal detectors, automatic door locks, onsite security, and bulletproof windows, to name a few. Deploying layers of technologies or strategies is the most effective method for keeping people safe from gun threats.

Sources:

- Russell, S., & Norvig, P. (2020). *Artificial Intelligence: A Modern Approach* (4th ed.). Pearson.

- McCulloch, W. S., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. *Bulletin of Mathematical Biophysics*, 5(4), 115-133.

- Minsky, M., & Papert, S. (1969). *Perceptrons: An Introduction to Computational Geometry*. MIT Press.

- Schmidhuber, J. (2015). Deep learning in neural networks: An overview. *Neural Networks*, 61, 85-117.

- ACM. (2018). 2018 ACM A.M. Turing Award Recipients: Yoshua Bengio, Geoffrey Hinton, and Yann LeCun. Retrieved from https://awards.acm.org/about/2018-turing

- DARPA. (2004). DARPA Grand Challenge 2004 Final Report. Defense Advanced Research Projects Agency. Retrieved from https://www.esd.whs.mil/Portals/54/Documents/FOID/Reading%20Room/DARPA/15-F-0059_GC_2004_FINAL_RPT_7-30-2004.pdf

- Thrun, S., et al. (2006). Stanley: The robot that won the DARPA Grand Challenge. *Journal of Field Robotics*, 23(9), 661-692.

- Russakovsky, O., et al. (2015). ImageNet Large Scale Visual Recognition Challenge. *International Journal of Computer Vision*, 115(3), 211-252.

- Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. *Advances in Neural Information Processing Systems*, 25, 1097-1105.

- Deng, J., et al. (2009). ImageNet: A large-scale hierarchical image database. *IEEE Conference on Computer Vision and Pattern Recognition*, 248-255.

- He, K., et al. (2016). Deep residual learning for image recognition. *IEEE Conference on Computer Vision and Pattern Recognition*, 770-778.

- Redmon, J., et al. (2016). You only look once: Unified, real-time object detection. *IEEE Conference on Computer Vision and Pattern Recognition*, 779-788.